Acoustic Music Similarity Analysis (Research project 2006-2007)

Motivated by a desire for better music recommendations, I built a fully automatic approach for recommending music based on “how it sounds,” using musical characteristics like instrumentation, tempo, and mood. The goal of this system is to give objective recommendations instead of relying on collaborative filtering (e.g., “Listeners Also Bought…” on iTunes), which is easily skewed by popular songs and fails to account for new music.

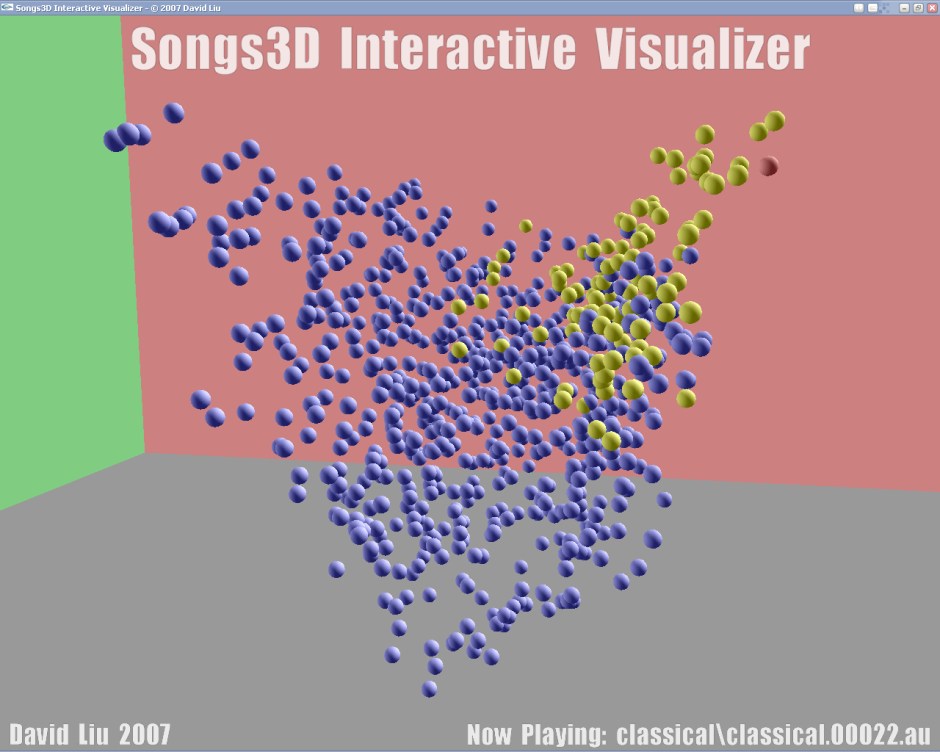

I wrote software to extract the frequency distributions of songs, run feature analysis, and arrange the songs in a space where similar songs are closer together.
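As a rough illustration of the first step, here is a minimal sketch of extracting short-window frequency distributions with NumPy. It is not the original project code: the function name `frame_spectra`, the frame and hop sizes, and the synthetic 440 Hz tone standing in for decoded audio are all assumptions made for the example.

```python
import numpy as np


def frame_spectra(signal, frame_len=1024, hop=512):
    """Return magnitude spectra, one row per short overlapping window."""
    window = np.hanning(frame_len)  # taper each frame to reduce spectral leakage
    starts = range(0, len(signal) - frame_len + 1, hop)
    frames = np.stack([signal[s:s + frame_len] * window for s in starts])
    return np.abs(np.fft.rfft(frames, axis=1))


# Usage with a synthetic tone (assumed stand-in for a decoded song).
sample_rate = 22050
t = np.arange(sample_rate) / sample_rate        # one second of samples
tone = np.sin(2 * np.pi * 440.0 * t)
spectra = frame_spectra(tone)
freqs = np.fft.rfftfreq(1024, d=1.0 / sample_rate)
peak_hz = freqs[spectra.mean(axis=0).argmax()]  # strongest bin, near 440 Hz
```

Each row of `spectra` describes one short window, which is the kind of raw material that per-window features are computed from.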

I competed with this project at the Intel International Science and Engineering Fair (ISEF), the California State Science Fair, and the Synopsys Silicon Valley Science Fair. Here’s a video from Intel of my live demo at ISEF 2007.

Tech Specs: The most successful technique I found was to extract MFCC (mel-frequency cepstral coefficient) features from short windows of audio, cluster them into a per-song feature histogram, and compare these signatures using the Earth Mover’s Distance. Then, drawing on techniques from spectral clustering, I arranged the songs in a transformed feature space. This novel approach separated songs into distinct groups and produced significantly better retrieval results, as measured by genre-matching accuracy.
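The pipeline described above can be sketched in a few functions. This is a hedged illustration, not the original implementation: it assumes per-window feature vectors (such as MFCCs) are already available as NumPy arrays, uses a plain k-means for the clustering step, solves the Earth Mover’s Distance as a small linear program with SciPy’s `linprog`, and uses a Gaussian affinity with a normalized graph Laplacian for the embedding. All function names, the cluster count, and the synthetic data are assumptions chosen for the example.

```python
import numpy as np
from scipy.optimize import linprog


def make_signature(frames, k=4, n_iter=20, seed=0):
    """Cluster per-window feature vectors into k centroids with weights."""
    rng = np.random.default_rng(seed)
    centroids = frames[rng.choice(len(frames), size=k, replace=False)]
    for _ in range(n_iter):
        # Plain k-means: assign frames to the nearest centroid, then update.
        dists = np.linalg.norm(frames[:, None] - centroids[None], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centroids[j] = frames[labels == j].mean(axis=0)
    dists = np.linalg.norm(frames[:, None] - centroids[None], axis=2)
    weights = np.bincount(dists.argmin(axis=1), minlength=k) / len(frames)
    return centroids, weights


def emd(sig_a, sig_b):
    """Earth Mover's Distance between two signatures of equal total weight."""
    (ca, wa), (cb, wb) = sig_a, sig_b
    cost = np.linalg.norm(ca[:, None] - cb[None], axis=2)  # ground distances
    m, n = cost.shape
    # Flow variables f[i, j] are flattened row-major; A_eq fixes the marginals.
    a_eq = np.zeros((m + n, m * n))
    for i in range(m):
        a_eq[i, i * n:(i + 1) * n] = 1.0  # sum_j f[i, j] = wa[i]
    for j in range(n):
        a_eq[m + j, j::n] = 1.0           # sum_i f[i, j] = wb[j]
    res = linprog(cost.ravel(), A_eq=a_eq, b_eq=np.concatenate([wa, wb]))
    return res.fun


def pairwise_emd(signatures):
    """Symmetric matrix of EMD values between all pairs of signatures."""
    n = len(signatures)
    dist = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            dist[i, j] = dist[j, i] = emd(signatures[i], signatures[j])
    return dist


def spectral_embedding(dist, n_dims=2):
    """Embed items using eigenvectors of the normalized graph Laplacian."""
    sigma = np.median(dist[dist > 0])  # assumed kernel-width heuristic
    affinity = np.exp(-(dist ** 2) / (2 * sigma ** 2))
    d_inv_sqrt = 1.0 / np.sqrt(affinity.sum(axis=1))
    laplacian = np.eye(len(dist)) - d_inv_sqrt[:, None] * affinity * d_inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(laplacian)  # eigenvalues in ascending order
    return eigvecs[:, 1:n_dims + 1]         # skip the trivial first eigenvector


# Synthetic stand-in for MFCC frames: two groups of three "songs" each.
rng = np.random.default_rng(1)
songs = [rng.normal(loc=center, scale=1.0, size=(200, 4))
         for center in (0.0, 0.0, 0.0, 5.0, 5.0, 5.0)]
signatures = [make_signature(frames) for frames in songs]
dist = pairwise_emd(signatures)
coords = spectral_embedding(dist)  # one 2-D point per song
```

In this toy setup, songs drawn from the same group end up with small pairwise distances and land on the same side of the first embedding coordinate. Real MFCC frames would replace the synthetic arrays.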
